Decentralisation in the blockchain space

Balázs Bodó, University of Amsterdam

Jaya Klara Brekke, Durham University

Jaap-Henk Hoepman, Radboud University

The rapidly evolving blockchain technology space has put decentralisation back into the focus of the design of techno-social systems, and the role of decentralised technological infrastructures in achieving particular social, economic, or political goals. In this entry we address how blockchains and distributed ledgers think about decentralisation.

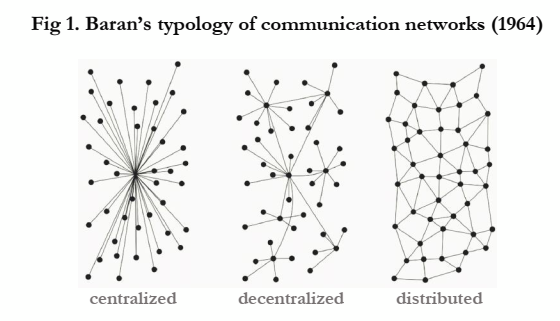

Decentralised network topologies

A network is made up of nodes and edges, the interconnections between the members of the network. In a distributed network every node has roughly the same number of edges, and there is more than one route by which nodes can connect with each other. This means that the topology of the network does not contain nodes in central or privileged positions, or if there are hierarchies built into the network, each node belongs to more than one hierarchy. This gives distributed networks a special property: the failure of a few nodes (even if they are chosen on purpose) still leaves the network connected, allowing all nodes to communicate with each other (albeit over a possibly much longer path than in the original network). In mathematics (and computer science) Graph Theory is devoted to the study of networks and their properties (Bondy and Murty, 2008).

Figure 1: Various network topologies (Baran, 1964).

Though often used as synonyms, decentralised and distributed networks are not the same. Decentralised networks are built from a hierarchy of nodes, and nodes at the bottom of the hierarchy have only a single connection to the network. Failure of a few nodes in a decentralised network still leaves several connected components of nodes that will be able to communicate with each other (but not with nodes in a different component).
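The difference in resilience between the three topologies can be illustrated with a short sketch. The seven-node graphs below are hypothetical toy examples, loosely modelled on Baran's figure, and the helper `components_after_failure` is an illustrative name rather than part of any library. The sketch removes one node from each graph and counts how many separate connected components remain.

```python
from collections import deque

def components_after_failure(edges, failed):
    """Return the connected components (as sets) left after removing `failed` nodes."""
    nodes = {n for e in edges for n in e} - set(failed)
    adj = {n: set() for n in nodes}
    for a, b in edges:
        if a in nodes and b in nodes:
            adj[a].add(b)
            adj[b].add(a)
    seen, comps = set(), []
    for start in sorted(nodes):
        if start in seen:
            continue
        # Breadth-first search collects everything reachable from `start`.
        comp, queue = {start}, deque([start])
        while queue:
            cur = queue.popleft()
            for nxt in adj[cur]:
                if nxt not in comp:
                    comp.add(nxt)
                    queue.append(nxt)
        seen |= comp
        comps.append(comp)
    return comps

# Toy topologies on seven nodes (assumed for illustration only).
star = [(0, i) for i in range(1, 7)]                     # centralised: hub 0
tree = [(0, 1), (0, 2), (1, 3), (1, 4), (2, 5), (2, 6)]   # decentralised: hierarchy
ring = [(i, (i + 1) % 7) for i in range(7)]
mesh = ring + [(i, (i + 2) % 7) for i in range(7)]        # distributed: 4 edges per node

for name, edges, failed in [("star", star, [0]), ("tree", tree, [1]), ("mesh", mesh, [1])]:
    print(name, len(components_after_failure(edges, failed)))
# prints: star 6, tree 3, mesh 1
```

Losing the hub of the star isolates every remaining node, losing an intermediate node of the hierarchy splits it into several components, and the mesh stays in one piece.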

The degree of decentralisation and distributedness varies from network to network. In general, networks that are more distributed are more resilient to the failure of individual nodes or the loss of connections between them. This resilience applies to both concrete and virtual networks, i.e., physical network infrastructures (such as the routers, cables, backbones, and Wi-Fi hotspots of the internet), and virtual networks running on the physical layer, such as blockchain networks or file sharing networks.

Initially designed to be a cold-war resilient distributed network, the internet is in fact a decentralised network. Consequently, there are multiple stakeholders, and multiple physical as well as virtual bottlenecks where the network is controllable, or vulnerable to surveillance, and failure (Forte et al., 2016; Kaiser, 2019; Kastelein, 2016; Snowden, 2019). Likewise, while the TCP/IP protocol envisaged a network in which each node (user, machine) could be both an information sender and receiver, in practice, highly centralised virtual networks emerged in knowledge production, communication, or commerce. The recent wave of re-decentralisation (Redecentralize.org, 2020) tries to address the centralisation of the virtual layers - often assuming this will lead to decentralisation in other dimensions including power and political control (Buterin, 2017).

Advantages and disadvantages of decentralisation

Different network topologies come with particular advantages and disadvantages that vary with the degree of centralisation, and with the ways networks become more or less distributed over time. Distributed networks are more resilient to failure but incur a cost to maintain coordination. Centralised networks are much easier to maintain, but the central node can be a performance bottleneck and a single point of failure.

The following table summarises the main costs and benefits associated with distributed and centralised networks.

| | Costs | Benefits |
|---|---|---|
| Distributed | Costs of maintaining individual nodes (security, connectivity, bandwidth, etc.); cost of network coordination | Higher resilience; lack of nodes with unilateral control power |
| Centralised | Central nodes can unilaterally set the conditions for using the network; lower resilience of the network, in particular the vulnerability of the network to the failure of the central nodes | Higher efficiency; lower cost of coordination |

In distributed networks, each node has a wide range of responsibilities and associated costs. A distributed network is only operational if there is a coordination mechanism between the nodes.

In the absence of robust solutions to the problems of coordination and fault tolerance, as Lamport et al. (2019) have noted, a distributed system is only a network “in which the failure of a computer you didn't even know existed can render your own computer unusable”.

Coordination problems must address, for example, how nodes reach each other (as in the internet routing system); how to deal with competition and race conditions (when multiple nodes want to use the same limited resource, such as a network printer); or how the system’s operational and development processes are governed (Katzenbach and Ulbricht, 2019). While many of the more common technical coordination issues in distributed networks have been addressed and have robust solutions, governance, which might be equally distributed, remains experimental (Arruñada and Garicano, 2018; Atzori, 2017; De Filippi and Loveluck, 2016a). Most of the distributed applications and services have bare-bones, generic governance frameworks. Governance, however, entails more than, for example, an infrastructure of secure voting. Effective participation in the governance mechanisms of a distributed social, political, economic system also requires substantive investment from the individual in terms of knowledge, time, attention, engagement.

The problem of fault tolerance has to do with failures and attacks[1]: a fault-tolerant design ensures that the overall network remains functional and continues to work towards its overarching goal even while some of its components fail. Attacks that are particular to distributed and decentralised systems include DDoS (Distributed Denial of Service)[2] and Sybil attacks[3]. Distributed architectures are designed to be tolerant of the failure of a relatively high number (typically 30-50%) of all nodes in a network[4]. But Troncoso et al. (2017) also showed that decentralisation, done naively, may multiply the ‘attack vectors’ and security risks, not least the breach of privacy. Distributed architectures might also be worse in terms of availability and information integrity, as the failure of nodes may have a fundamental impact on these properties.
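The 30-50% range can be made concrete with two classical bounds from the distributed systems literature. Reading the range this way is an assumption made for illustration, not something the text above states: agreement in the presence of Byzantine (malicious) nodes requires n ≥ 3f + 1 nodes to tolerate f faults, while majority-quorum protocols that only face crash failures require n ≥ 2f + 1. The function names below are hypothetical.

```python
def max_byzantine_faults(n: int) -> int:
    """Largest f with n >= 3f + 1 (classical Byzantine agreement bound)."""
    return max((n - 1) // 3, 0)

def max_crash_faults(n: int) -> int:
    """Largest f with n >= 2f + 1 (majority quorums, crash failures only)."""
    return max((n - 1) // 2, 0)

for n in (4, 10, 100):
    print(n, max_byzantine_faults(n), max_crash_faults(n))
# prints: 4 1 1, then 10 3 4, then 100 33 49
```

For a network of 100 nodes this gives 33 tolerated malicious nodes or 49 tolerated crashed nodes, which roughly brackets the range quoted above.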

In distributed networks, individual nodes must also take care of their own security and availability. Distributed networks also have issues with efficiency, such as the transaction throughput of blockchain systems, or the bandwidth and latency in the Tor routing network.

In return, when done right, distributed networks offer higher resilience. There is also a lower risk of any central actor taking control, or exercising unilateral power over the network. For this reason, decentralised network topologies are also used to achieve information security properties such as privacy, censorship resistance, availability, and information integrity (Hoepman, 2014; Troncoso et al., 2017b).

In centralised systems coordination is taken care of by central actors who can specialise, and this leads to efficiency gains. There are costs to this, however, including making the network more vulnerable to the failure, or the abusive behaviour of that central node. Since network transactions run through a specific server, this grants those who control that server significant powers to observe, manipulate or cut off traffic (Troncoso et al., 2017), as well as to control, censor, tax, limit or boost particular social interactions, economic transactions, information exchange among network participants, and unilaterally set the conditions of interactions within the network.

To illustrate this cost-benefit calculus, consider the privacy-protecting Tor network. Tor is able to give reasonable levels of privacy at the cost of using a distributed network to route messages with lower speeds and higher latency. These costs are seemingly too large for everyday users, who are willing to settle for lower levels of privacy. On the other hand, for political dissidents who fear government retribution, journalists whose integrity depends on their ability to protect their sources, and other groups for whom strong privacy is essential, the cost-benefit analysis justifies the higher costs of using this distributed network.

Both the costs and the benefits of using distributed network topologies are dynamic in nature, and are heavily dependent on factors both internal and external to the network. For example, the unresolved problem of distributed governance often creates a certain structurelessness in the social, political dimensions of distributed networks. As Freeman (1972) or De Filippi and Loveluck (2016) pointed out, seemingly unstructured social networks risk informal centralisation of their governance. In fact, blockchain networks have highly centralised forms of governance (Azouvi, Maller, and Meiklejohn, 2018; De Filippi, 2019; De Filippi and Loveluck, 2016b; Musiani, Mallard, and Méadel, 2017; Reijers, O’Brolcháin, and Haynes, 2016). Blockchain networks may also suffer from centralisation in other dimensions of power. For instance, the proof-of-work (PoW) protocol in effect randomly selects a miner node, with a probability proportional to the computing power it contributes, to validate the latest batch of transactions for a relatively large reward, in order to minimise the risk of a malicious miner hijacking the transaction ledger. The corresponding low chance of being rewarded for being honest forced miners to aggregate into a handful of coordinated mining pools, which control the vast share of this critical resource in an otherwise physically, geographically distributed network. The alternative approach, proof-of-stake (PoS), requires that those who wish to validate transactions stake their decisions with hard (crypto)cash: the larger the stake, the larger the validating power. PoS may remove mining pools, but creates another form of centralised power, namely that of capital. On the other hand, the increasing legal pressure on P2P file sharing networks, in particular on central nodes, pushed these projects towards increasingly distributed architectures, such as BitTorrent networks with distributed hash tables (Giblin, 2011).
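The incentive to join a mining pool can be illustrated with a simplified model. The sketch assumes that each block is won independently with a probability equal to the miner's share of total computing power, and that one block is produced every ten minutes (Bitcoin's target interval). The two shares below are hypothetical figures chosen for illustration.

```python
def prob_at_least_one_block(share: float, blocks: int) -> float:
    """Chance that a miner with `share` of total hash power wins >= 1 of `blocks` blocks."""
    return 1.0 - (1.0 - share) ** blocks

def expected_wait_days(share: float, block_minutes: float = 10.0) -> float:
    """Mean waiting time until the miner's first block, in days."""
    return block_minutes / share / (60 * 24)

BLOCKS_PER_YEAR = 6 * 24 * 365  # assumes one block every 10 minutes

solo = 0.00001  # hypothetical solo miner holding 0.001% of hash power
pool = 0.10     # hypothetical pool holding 10% of hash power

for label, share in [("solo", solo), ("pool", pool)]:
    print(label,
          round(expected_wait_days(share), 2),
          round(prob_at_least_one_block(share, BLOCKS_PER_YEAR), 3))
```

Under these assumptions the solo miner waits close to two years on average for a single reward, while the pool wins several blocks a day and can pay its members small, regular amounts. The expected income per unit of computing power is the same in both cases, and only the variance differs.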

Distributed systems in practice

While distributedness, as we have noted earlier, has been proposed as a general template for both the physical and the virtual digital networks, truly distributed networks have only established themselves in particular niche applications, due to their particular cost-benefit balance.

P2P systems: P2P networks collectively make a resource (computation, storage) available among all nodes in the network. Examples of peer-to-peer computation networks are SETI@home[5] and Folding@home[6]. Napster, Kazaa, and the BitTorrent networks are peer-to-peer storage and file sharing networks, used to distribute copyrighted works under conditions of limited legal access (Johns, 2010; Patry, 2009). The peer-to-peer nature of these networks made it much harder to censor them and to take down material that infringed copyright (Buford, Yu, and Lua, 2009).

Distributed ledgers are distributed data structures where a set of bookkeeping nodes (sometimes called miners), interconnected by a peer-to-peer network, collectively maintain a global state without centralised control (Narayanan et al., 2016). Bitcoin (Nakamoto, 2008) was the first distributed ledger, inventing the blockchain as the data structure to store transaction histories of digital tokens capable of digitally representing units of value. Ethereum generalised the distributed ledger from recording transactions to processing code and storing the state of the network. Bookkeeping nodes maintain consensus on the list of executed transactions and their effect on the global state, as long as a specified fraction of the bookkeeping nodes is honest and active.
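The data structure at the core of such ledgers can be sketched as a chain of blocks in which each block stores the hash of its predecessor. The following is a minimal illustration only: the block fields and function names are assumptions, and the sketch deliberately leaves out signatures, proof-of-work and the consensus protocol between bookkeeping nodes.

```python
import hashlib
import json

def block_hash(block: dict) -> str:
    """SHA-256 digest of a block's canonical JSON encoding."""
    payload = json.dumps(block, sort_keys=True).encode()
    return hashlib.sha256(payload).hexdigest()

def append_block(chain: list, transactions: list) -> None:
    """Append a block that commits to the hash of the previous block."""
    prev = block_hash(chain[-1]) if chain else "0" * 64
    chain.append({"index": len(chain), "prev_hash": prev, "transactions": transactions})

def is_valid(chain: list) -> bool:
    """Check that every block still points at the hash of its predecessor."""
    return all(chain[i]["prev_hash"] == block_hash(chain[i - 1])
               for i in range(1, len(chain)))

chain = []
append_block(chain, ["alice->bob:5"])
append_block(chain, ["bob->carol:2"])
append_block(chain, ["carol->dave:1"])
print(is_valid(chain))  # True

chain[0]["transactions"] = ["alice->mallory:5"]  # attempt to rewrite history
print(is_valid(chain))  # False
```

Because every block commits to the one before it, changing an old transaction invalidates all later links, so any node holding a copy of the chain can detect the change.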

Secure multiparty computation allows several participants to collectively compute a common output, which is based on each of their private inputs. Instead of sending the private inputs to one central coordinator (that would therefore learn the values of all private inputs), the algorithm to compute the value is distributed and the computation is done on the devices of the participants themselves, thus ensuring that their inputs remain private (Cramer, Damgard, and Nielsen, 2015; Yao, 1982).
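One of the simplest instances of this idea is a secure sum based on additive secret sharing. The sketch below simulates all participants inside a single process, assumes that they follow the protocol honestly, and uses a hypothetical public modulus. Each participant splits its input into random shares, hands one share to every participant, and only publishes the sum of the shares it received.

```python
import secrets

MODULUS = 2**61 - 1  # hypothetical public modulus, larger than any possible total

def share(value: int, n_parties: int) -> list:
    """Split `value` into n additive shares that sum to it modulo MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares

def secure_sum(private_inputs: list) -> int:
    """Compute the total of all inputs from shares, without pooling raw inputs."""
    n = len(private_inputs)
    # Row i holds the shares party i hands out; column j is what party j receives.
    distributed = [share(x, n) for x in private_inputs]
    partial_sums = [sum(row[j] for row in distributed) % MODULUS for j in range(n)]
    # Parties publish only their partial sums; adding them reveals the total.
    return sum(partial_sums) % MODULUS

salaries = [52_000, 61_500, 48_250]
print(secure_sum(salaries))  # 161750
```

Each individual share is a uniformly random number, so no participant learns another's input from the shares it receives, yet the published partial sums add up to the correct total.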

Decentralisation as a social template

Distributed networks have brought experimentation with new coordination mechanisms, new ways to manage risks, and failures, lowering transaction costs and removing central powerful positions in technical terms. Proponents of disintermediation hope that these same logics provide new tools for horizontal social coordination, and the removal of political, economic, or social intermediary institutions, previously fulfilling those tasks.

The centralisation/decentralisation dichotomy is often framed in terms of power asymmetries, where distributed architectures are proposed as an alternative to authoritarian, coercive forms of political power. This dichotomy rests on a number of assumptions about power, and often does not fully account for the ways that, in practice, decentralisation in one dimension might produce or be enabled by centralisation in another. In terms of economics, distributed digital networks often align with the concept of perfectly competitive markets, designed to prevent the emergence of entities in a monopoly position, whether over information, resources, or anything else (Brekke, 2020). Yet in practice, markets tend to rely heavily on a regulatory body to ensure fair competition. Distributed ledger technologies (DLT) have also offered a possible technical solution to the loss of trust in institutional actors (Bodó, 2020), by setting up networks with little reliance on trusted third parties, and minimising the need to have trust in interpersonal relations (Werbach, 2018). Yet in practice, DLT brings along new kinds of intermediaries, from interface designers and wallet developers, to exchanges, miners, full nodes and core developers, and therefore requires new forms of accountability.

The recent popularity of distributed technical networks has raised important questions about the preferred modes of social, political, or economic organisation. Digital innovation changes the costs and benefits of coordination and collaboration (Benkler, 2006). This highlights questions about the roles that intermediaries play in those relations (Sen and King, 2003). For example, cryptocurrency technology may have successfully demonstrated that there is no need for a centralised intermediary to keep accounts, or even run an asset exchange. However, that is not the only function of banks and exchanges. Trust generation, due diligence, risk assessment, conflict resolution, rules provision, accountability, insurance, protection, stability, continuity, and education are arguably also core functions of the banking system, offered in conjunction with the bookkeeping function. A second set of questions addresses the various layers which constitute a complex techno-social system, and the fact that a distributed topology at one layer may not produce, require, or allow a distributed form of organisation at another. In fact, highly centralised governance is often a precondition for a distributed system to function, as is currently the case in blockchain-based systems. Another example would be the role of governments in ensuring fair and open competition in various markets, for instance through anti-trust regulation, or in politics.

Conclusion

Decentralised and distributed modes of organisation are well defined in computer science discourses and denote a particular network topology. Even there, they can be understood as an engineering principle, a design aim, or an aspirational claim. In the decentralisation discourse these three dimensions are often conflated without merit. A decentralised network design might not produce decentralising effects, nor is it necessarily decentralised in its actual deployment.

When the technical decentralisation discourse starts to include social, political, or economic dimensions, the risk of confusion may be even larger, and the potential harms of mistaking a distributed system for something it is not even greater. Individual autonomy, the reduction of power asymmetries, the elimination of market monopolies, direct involvement in decision making, and solidarity among members of voluntary associations are eternal human ambitions. It is unclear whether such aims can now suddenly be achieved by particular engineering solutions. An uncritical view of decentralisation as an omnipotent organisational template may crowd out alternative approaches to creating resilient, trustworthy, equitable, fault-resistant technical, social, political or economic modes of organisation.

References

Arruñada, Benito, and Luis Garicano. 2018. “Blockchain: The Birth of Decentralized Governance.”

Bondy, J. A., and U. S. R. Murty. 2008. Graph Theory. New York: Springer.

Freeman, Jo. 1972. “The Tyranny of Structurelessness.” Berkeley Journal of Sociology 151–64.

Kaiser, Brittany. 2019. Targeted. New York: HarperCollins.

Nakamoto, Satoshi. 2008. “Bitcoin: A Peer-to-Peer Electronic Cash System.” www.bitcoin.org.

Patry, William F. 2009. Moral Panics and the Copyright Wars. New York, NY: Oxford University Press.

Snowden, Edward. 2019. Permanent Record. Metropolitan Books.

Werbach, Kevin. 2018. The Blockchain and the New Architecture of Trust. Cambridge, MA: MIT Press.

Yao, Andrew C. 1982. “Protocols for Secure Computations.” Pp. 160–64 in. IEEE.

[1] Failure can mean multiple things: the unavailability of a node; unreliable, unexpected, or unaccounted-for behaviour; and any malicious, manipulative or destructive behaviour. Failures can happen for a number of reasons: stochastic processes which may equally affect any node in a network due to their intrinsic properties; failures in some of the underlying layers, such as energy failures or environmental force majeure; as well as failures due to attacks by malicious actors.

[2] A DDoS attack is when the bandwidth of a network is overloaded by flooding it with traffic coming from a distributed set of nodes.

[3] A Sybil attack is when one or more actors create many nodes such that the network seems distributed, when in actual fact it might be controlled by a single actor or a small set of actors.

[4] The so-called Byzantine Agreement protocols allow a system to agree on a common output as long as fewer than one-third of the members are faulty (in the Byzantine sense, meaning that they may be malicious) (Lamport, Shostak, and Pease, 2019). But this is only the case under certain conditions. In particular, fully asynchronous systems (where there is no bound on the time it can take for a message to arrive or the time a node may take to complete a step) defy deterministic solutions to the Byzantine Agreement problem (Fischer, Lynch, and Paterson, 1985). This highly theoretical line of research re-emerged with the birth of Bitcoin and the subsequent explosion of distributed ledger technologies that needed exactly what Byzantine Agreement offered: reaching agreement on the global order of transactions when faced with potentially malicious adversaries.

[5] Started in 1999, its aim is detecting intelligent life outside Earth, see https://setiathome.berkeley.edu

[6] Started in 2000, its aim is to simulate protein dynamics, see https://foldingathome.org

Martin Florian

PUBLISHED ON: 2 December, 2020 - 16:14

A very well-written and thought-through piece! I absolutely agree with the analysis and conclusions.

I would object to the distributed/decentralized distinction, however. It doesn't seem to me that Baran's classification has aged pretty well, or in any case it seems to me that the line it draws between "decentralized" and "distributed" adds more confusion than it adds benefits.

If you consider graph theory - you have the concept of centrality there, and different approaches to measure it. Given a plausible centrality metric for nodes (e.g., betweenness-centrality, PageRank, ...), we would tend to call a network (== graph) centralized if some of its nodes have high centrality compared to others. Why shouldn't it be right to call such a network *de*centralized to the extent that nodes have mostly similar centrality scores?

On the other hand, "distributed" often just means "involving more than one entity". Centralized networks (e.g., as implied by client/server architectures) are also distributed systems! My smartphone offloading computations to the Google cloud is a form of distributed computing. You could even say that "distributed network" is in a way a redundant term - as a network inherently involves more than one entity and is therefore inherently a "distributed thing".

Another thing, more of an idea: The views voiced by Moxie Marlinspike (and perhaps others?) on the merits of *centralized* systems could also be interesting in the context of this piece (I know of his blog post [1] and at least one talk of him [2] on the topic). More specifically his argument that we can get many features we hope to get from decentralization by making centralized systems transparent and centralized system operators easy to replace (e.g., by releasing all code they run as open source). He is also very good at pointing out pain points w.r.t. the development and user experience of more decentralized systems.

[1] https://signal.org/blog/the-ecosystem-is-moving/

[2] https://www.youtube.com/watch?v=Nj3YFprqAr8 (at 36C3)

(I'm pretty sure I submitted this comment yesterday but it somehow disappeared again? Anyway, this is a repost then.)

Florian Idelberger

PUBLISHED ON: 10 December, 2020 - 12:09

Thank you for the nice summary on the topic. I like that you start with a general overview between the different types of networks. Particularly concerning blockchain, you might be able to use as an example of decentralization that even if a centrally hosted user interface of a blockchain project goes away, contracts on chain are still useable.

After first distinguishing between centralized/decentralized/distributed, in the table you then only compare distributed vs centralized, is distributed here equated as decentralized, or meant as being on a spectrum?

"the proof-of-work (PoW) protocol randomly assigns a miner node to validate the latest batch of transactions"

As far as I understand it, this process cannot be described as random. There is a competition between miners, and thus not random as probability is determined by resources that a miner has available and other factors.

"The corresponding low chance of being rewarded for being honest forced miners to aggregate into a handful of coordinated mining pools,"

I'd argue that this is not the only thing that forced them, but also unequal distribution of cheap power and hardware.

While distributedness, as we have noted earlier, have been proposed

> ...has been proposed

What do you mean by physical digital network? As in the actual cables and so on, on which virtual networks are built? In this case, it might make sense to refer to the 7 layers of the OSI model.

Maybe in ‘distributed systems in practice’, it could be made clearer when a new part starts or that it does not only deal with blockchain, then the selection of systems makes more sense in my opinion.

@Martin Florian - great idea about Moxie. I think no one is disputing that centralization has benefits, but his views are also controversial. Just because all is open source for example does not mean sth can be easily replaced, it can still be hard or basically impossible to do that, due to network effects or just pure resource constraints. Moxie / Signal is a good example of that, as in the beginning the code was already open, but it was basically impossible to use in a way to access the same network.